I’ve been exploring the Royal Photographic Society (RPS) collection with data visualisation (see here). After segmenting the data by photographic technique and themes, I was interested to see if I could trace visual characteristics across the photograph collection; this is not possible by relying solely on the information in our catalogue. The collection is partly digitised and, at the time of writing, I’ve been working with around 1,200 items with an image and date.

One way to explore an image collection visually is to use a machine-learning model to extract feature vectors from the images. Feature vectors are (multi-dimensional) numerical measures that describe the visual characteristics of an image. You can use these measures to quantify and compare the visual similarities, and dissimilarities, between images.

Training a machine-learning model can be very computationally intensive. But you may get decent results without the need for specialist hardware, by running a model that is already trained on similar data. (All the processing described here was done on my laptop).

I ran the RPS images through the VGG16 neural network (named for the Visual Geometry Group at Oxford University, who developed it), which is a convolutional neural network: a type of machine learning model particularly suited to image analysis. The model was already trained on the Imagenet database (over 14 million modern photographs) and I didn’t do any further retraining.

I was initially a little skeptical about how successful this technique would be with the RPS data. The images the model was trained on are dissimilar in a number of ways: the RPS collection contains a much greater proportion of portraits; it’s physically diverse in the way modern digital photography is not – containing glass slides, small cards, large prints (sometimes framed and mounted) – and the vast majority of images are black and white. (Though compared to photos of rare and obscure objects in the V&A digitised collection, these images are probably closer!).

Having run the RPS images through the model and extracted feature vectors for each, it’s possible to do a reverse image search (in the manner of ‘Search by Image’ on Google Images). Essentially I choose an image to search by and, by identifying the images with the closest feature vectors, retrieve the most visually similar according to this model.

The feature vectors VGG16 outputs have 4,096 dimensions, as if measuring 4,096 characteristics of every image. To speed up computation over them, you can reduce down the dimensions (I reduced them to 300) using a statistical process called Principal Component Analysis that aims to bring out patterns in data by prioritising the most significant differences.

The results were better than I was expecting. I’ve included examples below; in each case the search image is the larger top image and those below are the 5 images the model considers most similar.

Some of the results are obvious in a way: duplicates, sets of photographs from an album, and those by an individual photographer with a distinctive style.

Whereas in the examples above the similarity test produces quite predictable results, in other cases the model cuts across the collection – particularly when searching across popular photograph types.

The image below is a full-length portrait carte de visite (a small, widely-produced photograph type from the late 1800s). The search results are more standing portrait cartes de visite, but taken at different photography studios at different times in different places. Searching across the collection, the model picks up on conventions for staging portraits over this period.

An image’s structure can be the basis for similarity, such as these photographs all mounted in a circle/oval (including ‘The Head of John the Baptist on a Charger’!):

As can the image content – here, landscapes with trees.

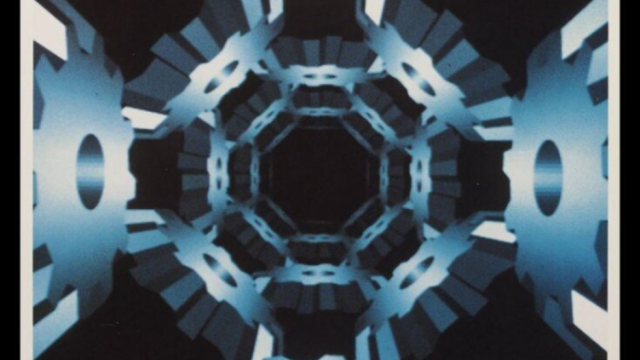

Of course, when there are no similar images in the collection, the model will fail to retreive any. See the results below (though conceivably, the image of lace [right] – an early, 1840s, salted paper print created by pioneer William Henry Fox Talbot – has a similar visual texture to the water beads.)

Neural networks are notorious for being difficult to interpret the way they work. (Though there have been recent attempts to address this). The search example below, a glass slide, is considered similar to other slides from the same set, but also to 2 not-obviously similar images – other than they all have borders. I wonder if the model is picking up on this structural aspect of the image?

And in some ways, that’s not a bad approach in this collection! The items are very physically diverse and the physical types can infer the image content. Many of the cartes de visite, for example, which have a characteristic narrow aspect ratio, are portraits. Could the neural network be hacking this characteristic of the collection to return convincing results?

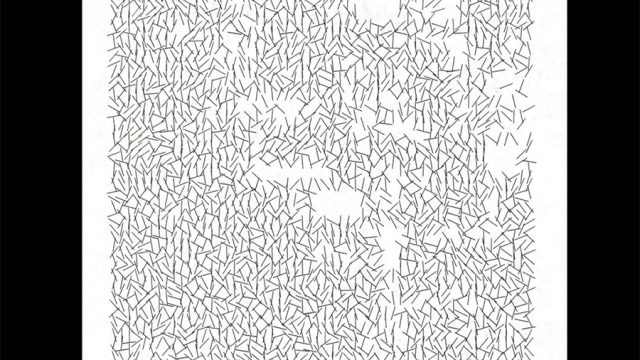

To try and overview how the model organises the collection, I can reduce the feature vector dimensions to 2 (using an algorithm called ‘t-sne’), losing some of the detail but allowing me to plot the results.

Direction has no meaning in this plot, but images considered similar will be close together. The layout looks slightly different every time you run the algorithm because there is an element of randomness in how the algorithm works.

Before dissecting this plot, what are the 2 clusters off on the left? Those are the daguerreotypes (a type of early photographic process) which, in the RPS collection, happen to be photographed with a colour chart. Machine learning models like this can pick up on this kind of accidental visual distinction. Because of the colour charts, the model considers the daguerreotypes alien from the the other images. And it further splits them into 2 distinct groups – colour chart placed to the side, or above/below – a meaningless difference (unless one is interested in colour calibration techniques!). Going forward, it would be worth cropping out the colour charts to avoid this.

Other than this accident, there seems to be some logic in how the model clusters the images (see annotations below).

Without having to retrain the model on historical photographs, this technique offers understandable, visual paths through the collection that were not possible before. When resources for cataloguing are limited, automated techniques like these can offer alternative ways of making sense of digitised collections.

In future work, it may be interesting to see if it’s possible to pull apart the different ways images can be visually similar; an image may have a circle mount, but you may be more interested by the image content than the circle shape.

Despite the apparent success of these methods, the difficulty in interpreting how the model works raises trust issues. Different audiences to collections have different requirements and expectations. For academics/researchers, trust and transparency in tools can be critical. As one historian said to me in a previous project: ‘when it comes to academic research … what I conclude from your tool feeds into my reputation’.

I’m now thinking about how I might apply this data in timeline visualisation. More to come soon…