This is a guest blog post by Anna Ridler, whose work, The Fall of the House of Usher, is displayed in Artificially Intelligent in Room 220.

The Fall of the House of Usher is a 12 minute animation where each still has been generated by a GAN (a type of AI) trained on my own drawings. It is a piece that could have been hand animated, but by choosing machine learning I was able to heighten and increase these themes around the role of the creator, the reciprocity between art and technology, and aspects of memory in a way that would not be available to me otherwise. I am interested in how the process of using artificial intelligence can be used to push ideas in a way that would not be possible otherwise.

When working with Artificial Intelligence there are two main materials: the data or training set used and the algorithm that is run to create the artificial intelligence. The algorithms that I use, and that I am particularly interested in are those that make GANs, or Generative Adversarial Networks. A GAN is a form of unsupervised machine learning invented in 2014, considered, in the machine learning world, to be notoriously unstable and not well understood – a complex, iterative process with many interdependencies. It is the process of two intelligences dancing around each other to make images and the unpredictable and unquantifiable results of the dynamics emerge. For the GAN to be constructed, one artificial intelligence network is trained on series of images (known as a training set) which it uses to try to create a realistic version of an image that could potentially come from that training set. The second AI looks at these created images and decides whether they are real or fake, true or counterfeit. As this second network is good at judging images that could not pass, the first AI learns to mimic imagery to the extent that the “the counterfeits are indistinguishable from the genuine articles” over the course of many cycles of learning, or epochs. The use of the word counterfeit is interesting, suggesting as it does a lack of truth. But the images that produced are not merely copies of what was in the training set, but rather entirely new images that have been created from the knowledge of what it has seen.

The training sets, or images that are given to them as input, provide the knowledge and are central to the eventual image output yet are rarely discussed. The datasets that are needed to make training sets are usually extremely large – thousands and thousands, sometimes millions, of images and inputs – and often proprietary. Whereas algorithms for machine learning are often open-sourced, even by large technology companies, the appropriate datasets that are needed to run them are not readily available. Most of these training sets that are open-sourced are compiled by researchers, often using mechanical turks (the invisible labour and associated power relations again rarely acknowledged) using various methodologies, but because people are always involved at some point in either the source content or in the process, they inevitably come to enshrine cultural or social attitudes, otherwise known as dataset bias. For example, ImageNet (a canonical database of over 14 million images often used) gives a narrow, conventional account of “beauty” – white, western, young – and for “monstrous” it not only pictures of Frankenstein that are shown but also pictures of disabled children. It is virtually impossible to look through every single image in a dataset this large, so the amount of control an artist has – what has been included or excluded, what biases and prejudices are being replicated and repeated – is very difficult to control. There is no certainty of a diverse and comprehensive vocabulary; the cultural, political or social biases of whatever groups of people that defined the training set will be exposed. Further, the scale that is needed means that it is extremely time-consuming and often costly to create a bespoke dataset, which can lock out experimentation or critique.

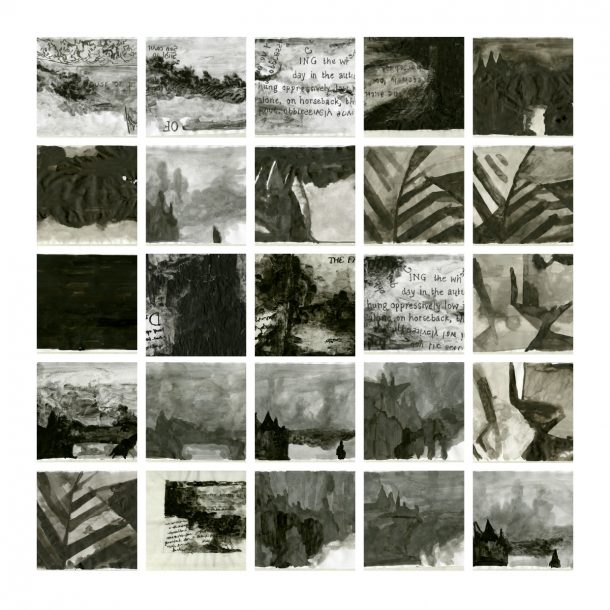

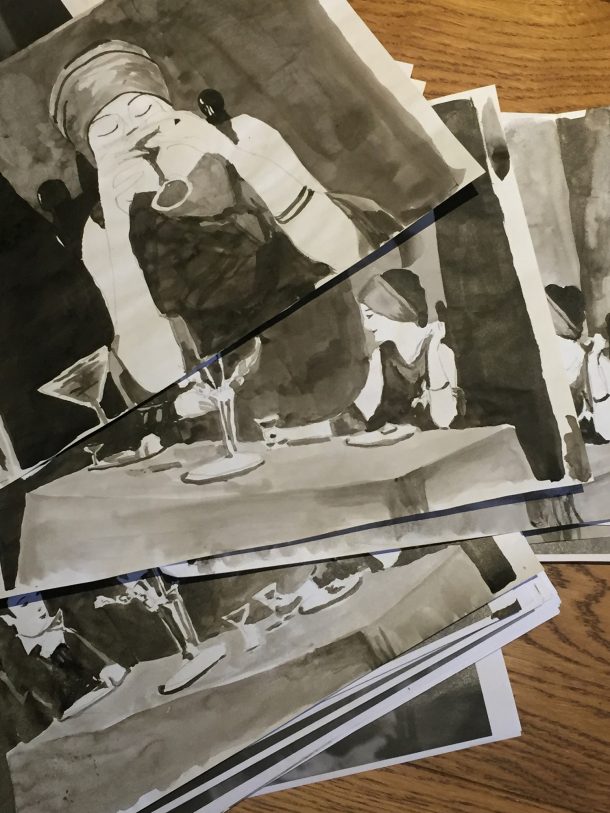

I am aware that the control that comes to me in this process really comes from what I do with the dataset. As someone who is interested in the hidden and the forgotten, it makes me uncomfortable to use someone else’s dataset without properly exploring it. By creating my own dataset, it forces me to examine each image and inverts the usual process for creating this type of dataset. To make my animation I made a training set of set of 200 drawings using the 1929 version of the Fall of the House of Usher as my base so that the GAN would essentially learn how to draw in my style. Drawing is interesting in the context of machine learning – drawing is the first language (you learn to draw before you learn to speak) so it seems appropriate to use this for emerging technology. It does three important things: records, gestures at the humanness behind it the image, and contains the process of editing (it is not just one point in time or one angle but an amalgamation of images, thoughts and memories). Chess used to be seen as essentially human, but now machines can do it this view has changed. Will the same thing happen to drawing? Already what drawing means is changing. William Kentridge, a South African visual artist, has written how “you can’t instantly create 400 drawings” but that is just not true anymore. Machine learning now allow us to do things that fifteen, twenty years ago would have been in the realm of fiction and I am very interested in using AI to push what drawing can be into new realms.

The creation of these drawings required an immense amount of labour. Unlike pressing a button, making a drawing is a commitment to spending a certain amount of time with a particular image on a sheet of paper. I deliberately chose to work with ink – working with, in a way, the GAN produced imagery that slightly painterly – but also because it is a material that has randomness already inherent in the medium. It is very difficult to control and the differences between pictures of the same scene are amplified. It is also unforgiving, goes everywhere and escapes. You end up getting traces of humanness – fingerprints – that seem somehow out of place in a digital work, but I think are so important to have.

By deciding what to include in my training set, there was also the chance to take a certain amount of control. By restricting the training set to the first four minutes of the film, I was able to control to a certain extent the levels of ‘correctness’. There are moments when it is incredibly good – at the start, in the sections where I have given it lots of moments of reference – but repeating and remembering leads to misremembering and as the film progresses, the information starts to break down. There are no training images so the programme is having to construct every frame from what it already knows leading to uncanny, eerie moments. Sometimes this works, sometimes it does not. Claude Shannon, the inventor of information theory, writes about the minimal amounts of information needed for understanding how “familiarity with words, idioms, cliches and grammar enables us to fill in missing letters in proof reading and unfinished phrases in conversation”. Familiarity with cinema and classic Hollywood, which have their own cliches and idioms and grammar allows the viewer to grasp hold of and understand meaning. Memory becomes part of the material that is needed to understand the work.

But this idea of control is misleading – it is impossible to predict what will come out in each of the stills – I can guess but I cannot know. The errors and choices that are made when drawing are amplified and the GAN holds a mirror up to my own drawing and makes me realise things that I was not aware of: what I find the most important, what I always edit out. It showed how I draw things like eyes and eyebrows incredibly similarly, and so it becomes confused between these two things. There is a chair that appears and disappears because sometimes I remembered to draw it in and sometimes I did not. The GAN copies me, learning the human-ness and mistakes that come as part of this process. It is loser and wilder than I ever would have been able to make myself. Making it reminded me of Tlön, Uqbar, Orbis Tertius, a short story by Borges, is an account in part of a fictional country of Uqbar where “the duplication of lost objects is not infrequent” but these duplications – hronir – “were the accidental products of distraction and forgetfulness” (my missing chair, lazy way of drawing eyes) “no no less real, but closer to expectations” but which over times and cycles become comes “a purity of line not found in the original”. It is my work but also not my work – recognisably me but nothing I would have been able to do by myself. Watching it is a very odd sensation like catching a glimpse of yourself in a mirror before you realise it is you.

Which leads of course to the question of where the creativity lies in this piece – is it me creating the training set, is it the GAN producing the image? What is the “real” art? a bricolage artist combining existing things or someone with memories and ideas trying to convey them to an audience through a new medium? Drawing is both a noun and a verb – it is a thing and and action – but while a GAN might be able to produce a drawing, I’m not sure if it can draw – the process and decisions that I described earlier. How it constructs an image is fundamentally different to how I construct an image. There is the intention that I brought to the piece – my choices and decisions and drawings, and also, I suppose, thinking – and for me, the training sets are key to this. The relationship between me and the GAN is a lot stronger than if I had just trained a model with a database I had found. It knows my style. Anyone who has access to it also has access to a bit of myself. I do not see a GAN as a tool like I would think of say a photoshop filter but neither would I see it is as true creative partner. I’m not really quite sure what is is. There is a long history of artists workshops – from the renaissance to Andy Warhol, so maybe for me, using a GAN falls into this category. It can copy and suggest but ultimately it starts and ends with me. I am not that worried about painting machines or GANs that make what is essentially wallpaper, art without intent. I am not interested in trying to get a machine to draw like a human or produced something that is imperceptible as being machine-made. Digital art can sometimes seem as if it trying to take the raw messy world and convert it into something shiny and robotic. I am interested in the opposite: how to take something cold and sterile and algorithmic and reintroduce the human back into it, and have found by using artificial intelligence in this way – a combination of a material and a process – maybe a way to do it.